
Adapting to AI: what 6 months of website analytics tells us about the future

What’s happening to our website reflects a bigger trend across open access content; fewer people are visiting our website but more might be reading our content than ever before. AI is changing how people access and apply knowledge – including in the legal world. This post explains what that looks like, what it means for organisations like ours, and what we’re doing in response.

Something unexpected is happening on our website – and it reflects a much bigger shift in how information is accessed online.

In the first half of 2025, visits to our website dropped by more than 50% compared to the same period last year. That might sound like a crisis – but at the same time, we saw a sharp rise in visits from AI systems: bots and autonomous agents used by large tech companies to collect, process, and use our content.

This suggests that more people may be engaging with our legal content than ever before – just not through us directly.

As an organisation that exists to drive change through open content, this shift is both exciting and challenging. It prompts big questions about how we reach our audience, demonstrate impact, and stay connected to the humans we serve.

New AI tools are probably replacing traditional website visits

Around 40,300 people visited our website in the first 6 months of 2025, compared with 83,600 in the first 6 months of 2024.

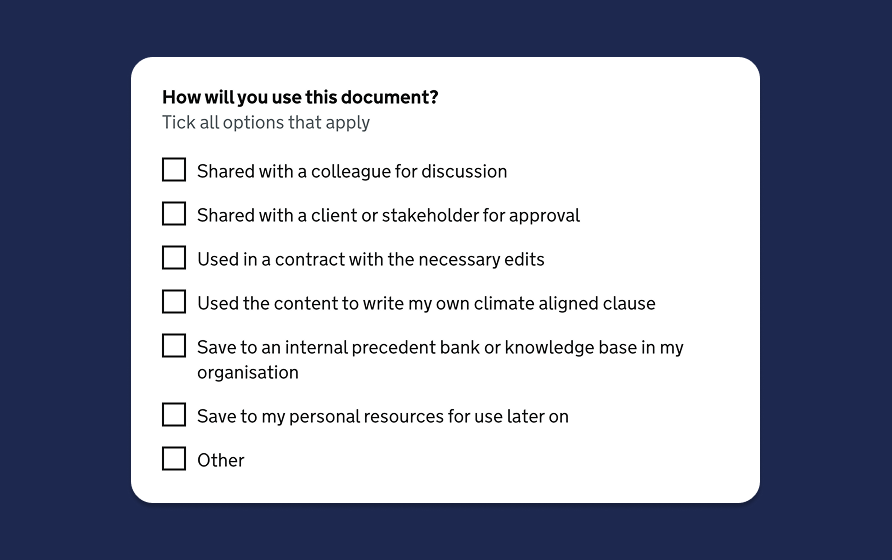

Website visitors between Jan and Jul: 2024 vs 2025

We think this 52% drop could be caused by AI tools replacing traditional website visits. For example, we know that lawyers and other professionals are increasingly using tools like ChatGPT or Harvey to find information and generate content. As our content also finds its way into LLMs (more on this below), users are likely accessing and using it through these AI tools without ever visiting our website directly. This means we’re potentially reaching more people than ever – but we can’t see them. And they can’t always see us either.
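For reference, the 52% figure follows directly from the two visitor counts above:

```latex
\text{drop} = \frac{83{,}600 - 40{,}300}{83{,}600} = \frac{43{,}300}{83{,}600} \approx 0.518 \approx 52\%
```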

There’s a new type of visitor to our website

Somewhere between December and January, we started to notice a change in visitor activity on our website. We saw a large increase in the number of pages viewed per visitor, alongside a drop in the time spent on the site.

Website visitor behaviour: Sep 2024 to Apr 2025

The visualised data, with overlapping trends, highlights a shift in behaviour: users are accessing significantly more pages in far less time. This spike in activity is characteristic of automated tools, not human browsing.
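The pattern described above (many pages viewed in very little time) can be turned into a simple rule of thumb. The sketch below is a hypothetical illustration, not the monitoring tooling we actually used: it assumes session records with a page count and a duration, and an arbitrary threshold of 10 pages per minute above which a session is treated as likely automated.

```python
from dataclasses import dataclass

# Hypothetical threshold, chosen for illustration only: sustained reading
# faster than this is unlikely to come from a human.
MAX_HUMAN_PAGES_PER_MINUTE = 10.0


@dataclass
class Session:
    session_id: str
    pages_viewed: int
    duration_seconds: float


def pages_per_minute(session: Session) -> float:
    """Page-view rate, treating sessions shorter than 1 second as 1 second
    so that zero-length sessions do not cause a division by zero."""
    minutes = max(session.duration_seconds, 1.0) / 60.0
    return session.pages_viewed / minutes


def looks_automated(session: Session,
                    threshold: float = MAX_HUMAN_PAGES_PER_MINUTE) -> bool:
    """Flag sessions whose page-view rate exceeds the threshold."""
    return pages_per_minute(session) > threshold


def automated_share(sessions: list[Session]) -> float:
    """Fraction of sessions flagged as likely automated (0.0 if none)."""
    if not sessions:
        return 0.0
    flagged = sum(1 for s in sessions if looks_automated(s))
    return flagged / len(sessions)


if __name__ == "__main__":
    sample = [
        Session("reader", pages_viewed=3, duration_seconds=180),   # 1 page/min
        Session("crawler", pages_viewed=40, duration_seconds=30),  # 80 pages/min
        Session("burst", pages_viewed=5, duration_seconds=0),      # zero-length
    ]
    for s in sample:
        print(s.session_id, looks_automated(s))
    print(f"share flagged: {automated_share(sample):.2f}")
```

A rate threshold like this is crude: a human skimming quickly or a slow, polite crawler would be misclassified, which is why dedicated bot-monitoring tools combine it with other signals such as the User-Agent header.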

Since spotting this, we have used monitoring tools on our website to better understand this activity, and discovered that, on average, 15% of our visitors are now agents or bots: autonomous software programs run by technology companies to harvest and process content.

- 71,600 visits from bots and agents between May and July 2025

- 15% of all website traffic came from bots and agents between May and July 2025

- 197 human visitors referred to our website by LLMs between May and July 2025

But who are these bots, and what exactly are they doing on our site?

Who’s behind the bots and agents

Comfortingly (or tediously), these bots are predictable: every one of them comes from one of the dominant players in AI and tech.

Companies owning common bots and agents visiting our website: May to Jul 2025

Those familiar with the current legal AI landscape may be surprised that such a large share of this traffic comes from a few big-name AI and tech companies, with little evidence of bots from smaller legal AI startups. However, almost all legal AI companies are built on top of an existing LLM, like OpenAI’s GPT-4. This reliance has two implications:

- The AI and tech companies that will be accessing our content are predictable. And that’s a good thing. We can learn the structures they prefer and work to serve our content in those formats, which will have a cascading impact on all the smaller legal AI companies built on top of their models.

- It can be difficult to know exactly where our information ends up, even if we know who originally scraped it. Still, we can infer some of this from the names of different scraping agents (for example, see the discussion of ChatGPT-User below).

What the bots and agents are doing on our website

By analysing the bots and agents responsible for the ~71,600 visits to our website between 19 May and 19 July 2025, we can start to get an idea of why they’re here and what they’re doing.

Common tasks performed by bots and agents visiting our website: May to Jul 2025

These bots and agents do the following on our website:

- Harvesting content to train AI models (36.3%): this involves collecting large amounts of domain-specific data (in our case, our clauses) to train or improve AI models. Even where training datasets already exist, there is value in refreshing them regularly so that they reflect the current internet (and, therefore, the modern world). This activity is no surprise to us, as our content is original, high quality and domain specific – something OpenAI is clearly focussing on. Our content is also free and has no barriers to access, which is unusual in the legal sector.

- Crawling and indexing content (33.2%): this involves reading our content to make it searchable or referenceable by other platforms such as search engines or AI-powered search. This is a normal activity that happens on most websites.

- Auditing and assessing search presence (17.3%): this involves checking our website’s health, performance, and backlinks. It’s usually an automated process from platforms that provide competitive analysis or marketing insights. This is a standard activity that happens on most authoritative websites.

- AI tools performing tasks on behalf of users (9.2%): these tools interact directly with users or assist with tasks (they sometimes have a conversational interface like ChatGPT). This is a new kind of activity and very interesting for us (see below).

- Monitoring and intelligence gathering (4.1%): this involves reading content for specific mentions and trends to gather timely insights. This is a normal activity that happens on most authoritative websites.
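Sorting traffic into categories like these usually starts from the User-Agent header, where well-behaved bots announce themselves with a published token. The sketch below shows the idea under stated assumptions: the token list is a small, hand-picked and incomplete sample of publicly documented crawlers, the category labels simply mirror the five groupings above, and it is not necessarily how the monitoring tools we used work. User-Agent strings are self-reported and can be spoofed, so real tools also check IP ranges and behaviour.

```python
from collections import Counter

# Illustrative and deliberately incomplete: a few publicly documented
# user-agent tokens, mapped to (operator, activity category). Categories
# mirror the groupings in this post; no token is listed for "monitoring".
UA_CATEGORIES = {
    "gptbot": ("OpenAI", "Harvesting content to train AI models"),
    "claudebot": ("Anthropic", "Harvesting content to train AI models"),
    "googlebot": ("Google", "Crawling and indexing content"),
    "bingbot": ("Microsoft", "Crawling and indexing content"),
    "oai-searchbot": ("OpenAI", "Crawling and indexing content"),
    "ahrefsbot": ("Ahrefs", "Auditing and assessing search presence"),
    "semrushbot": ("Semrush", "Auditing and assessing search presence"),
    "chatgpt-user": ("OpenAI", "AI tools performing tasks on behalf of users"),
}

UNKNOWN = ("Unknown", "Unclassified")


def classify(user_agent: str) -> tuple[str, str]:
    """Return (operator, category) for the first known token found in the
    User-Agent string, matched case-insensitively."""
    ua = user_agent.lower()
    for token, label in UA_CATEGORIES.items():
        if token in ua:
            return label
    return UNKNOWN


def category_shares(user_agents: list[str]) -> dict[str, float]:
    """Percentage of requests per category, rounded to one decimal place."""
    counts = Counter(classify(ua)[1] for ua in user_agents)
    total = sum(counts.values())
    return {cat: round(100 * n / total, 1) for cat, n in counts.items()}


if __name__ == "__main__":
    sample = ["GPTBot/1.1", "GPTBot/1.1", "ChatGPT-User/1.0", "AhrefsBot/7.0"]
    print(category_shares(sample))
```

Because each request is assigned to exactly one category and the shares are rounded, published breakdowns like the one above can sum to slightly more or less than 100%.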

So what does this mean for our mission?

The ChatGPT-User bot: is this a signal that our content is safe, trusted and used?

The “ChatGPT-User” bot is the second most active bot on our website, responsible for about 9% of all bot traffic. That’s significant, because when this bot visits, it suggests our content is being treated as safe and trustworthy.

According to OpenAI, the ChatGPT-User bot is deployed during user searches and uses Microsoft’s Bing API to filter for reliable and accurate sources. It’s designed to avoid misleading or problematic content, which means appearing in these searches is a signal of credibility.

However, it’s not just individual users behind the traffic from the ChatGPT-User bot. Searches performed by this bot are also triggered when users create custom GPTs and other tools built using OpenAI’s models. For example, in the legal world, platforms like Lexis+ AI, Spellbook, Harvey, and Thomson Reuters CoCounsel are all using OpenAI models in their tech stack to power AI-driven features. OpenAI hasn’t confirmed exactly which bots it uses in its model development pipeline, but since the process relies heavily on search data, it’s likely that the ChatGPT-User bot is one of them. So when we see visits from the ChatGPT-User bot, we believe it likely means our content is being used to power a growing number of legal AI tools.

For us, this is a promising indicator of impact as it shows that our content is not only trusted by leading model providers, but is also helping shape the answers and recommendations being delivered to legal professionals through these tools.

Why AI access supports – and challenges – our mission

We want our content to reach as many people as possible; that’s why it’s free, open, and designed for reuse. So in many ways, seeing our legal clauses and resources used by AI systems is a sign that our mission is working.

When our content is pulled into large language models (LLMs) – the AI systems behind tools like Lexis+ AI, Spellbook, Harvey and CoCounsel – it becomes accessible to a much wider audience of people using those platforms to write, negotiate, or understand contracts. That’s powerful because these users may never have found our website or heard of our organisation, yet our content can shape how they think about climate and responsibility.

But there’s a catch.

AI systems often repurpose content without attribution, transparency, or feedback. We don’t know how it’s being interpreted, or how it’s being used. We can’t correct errors or update outdated versions. And we lose the direct connection with the users – the lawyers, advisors, and change-makers – we’re here to support.

This is the double-edged nature of AI: it extends our reach but weakens our visibility. That’s why we’re taking steps to minimise the risk of our content being misrepresented or distorted by AI tools by rethinking how we distribute and license our content for AI access.

As website traffic declines, our story of impact evolves

As a non-profit, being able to demonstrate the reach and impact of our open access content is essential. It shows whether our work is making a meaningful difference and helps strengthen funders’ confidence in our mission. At the moment, a large part of our impact reporting is based on data from how people interact with our website. But as website visits decline, so does the volume of data we can draw on. That means we have less evidence to show our impact – and fewer insights into how our content is performing, how people are engaging with it, and where we can improve.

However, while the decline in website traffic does reduce the volume of traditional analytics we can rely on, this doesn’t mean our impact is disappearing – just that it’s becoming harder to see using old methods. As our recent work with NPC highlights, when direct measurement becomes difficult the solution isn’t to give up – it’s to get smarter about how we explain and evidence our work.

We’re not alone with these challenges

Many organisations – particularly publishers, non-profits, and open access platforms – are grappling with the same shift: a move away from traditional website visits toward content being consumed indirectly, often through syndication or use within other platforms and AI tools. This broader trend has been well documented, and it’s forcing a fundamental rethink of how visibility, engagement, and impact are measured online.

There’s growing evidence that this pattern is affecting other high-quality, open access websites. For example, Software Heritage, a non-profit whose mission to preserve and provide access to open source code aligns closely with ours, has also reported a sharp increase in visits from scraping bots. Their experience highlights the same underlying tension: when your mission is to make valuable content freely available, automated access can both support that mission and complicate how you manage, track, and fund it.

How we’re responding

Deliver and structure content so that machines can read and use it efficiently

We’re exploring some behind-the-scenes technical infrastructure to help machines access our legal content (an API) and make sense of it (a Knowledge Graph).

- Access: Develop an API to make our legal content available to machines on demand. It works like a menu that machines can query to get exactly what they need. As long as technology platforms discover and use it, it will also reduce the need for crawlers to harvest content page by page, since they can simply request the latest versions of the content they need.

- Meaning: Develop a Knowledge Graph to make our legal content understandable and logically navigable by machines. This adds semantic context, helping machines understand what things mean and how they relate.
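To make the API and Knowledge Graph ideas more concrete, here is a minimal sketch, assuming a tiny in-memory graph stored as (subject, predicate, object) triples and a function standing in for a hypothetical `GET /clauses/{id}` endpoint. The clause identifiers, predicate names, and endpoint are invented for illustration; a production system would more likely use an established graph model such as RDF and a proper web framework.

```python
import json
from typing import Optional

# Hypothetical triples (subject, predicate, object); identifiers and
# predicate names are invented for illustration.
TRIPLES = [
    ("clause:example-a", "type", "Clause"),
    ("clause:example-a", "title", "Example clause A"),
    ("clause:example-a", "appliesTo", "contract-type:supply-agreement"),
    ("clause:example-a", "relatedTo", "clause:example-b"),
    ("clause:example-b", "type", "Clause"),
    ("clause:example-b", "title", "Example clause B"),
]


def objects(subject: str, predicate: str) -> list[str]:
    """All objects linked to `subject` by `predicate`."""
    return [o for s, p, o in TRIPLES if s == subject and p == predicate]


def related_titles(clause_id: str) -> list[str]:
    """Follow 'relatedTo' links one hop and return the linked clauses' titles."""
    return [title
            for other in objects(clause_id, "relatedTo")
            for title in objects(other, "title")]


def get_clause(clause_id: str) -> Optional[str]:
    """JSON body a hypothetical GET /clauses/{id} endpoint might return,
    or None if the ID is not a known clause."""
    if "Clause" not in objects(clause_id, "type"):
        return None
    titles = objects(clause_id, "title")
    return json.dumps({
        "id": clause_id,
        "title": titles[0] if titles else None,
        "appliesTo": objects(clause_id, "appliesTo"),
        "relatedTo": objects(clause_id, "relatedTo"),
        "relatedTitles": related_titles(clause_id),
    })


if __name__ == "__main__":
    print(get_clause("clause:example-a"))
```

The explicit `relatedTo` and `appliesTo` links are what make the content "logically navigable": a machine can move from one clause to related clauses and contract types without having to infer those relationships from page text.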

By structuring our content in these machine-readable ways, we can make it easier for AI tools and modern legal tech platforms to access and use. This approach not only reduces the risk of our content being misrepresented or misunderstood, but also makes the process of collecting and using it more efficient, including from an energy perspective.

Develop a new approach to licensing and attribution of our legal content

We’re developing simple, practical ground rules for how machines can interact with our content – and how they should handle it. This will involve two complementary approaches:

- Legal: We’ll publish clear, accessible guidelines for automated tools, crawlers, and AI systems. These will cover content harvesting, permissions, attribution, and responsible use. While these aren’t legally binding, they signal our expectations and establish a clear position for others to follow.

- Technical:

  - C2PA provenance tagging: embedding a digital “nutrition label” into our content that confirms its origin, authorship, and any modifications over time.

  - robots.txt and HTTP headers: making smarter use of these tools to guide how bots and crawlers access and index our site.
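As an illustration of the robots.txt side, the sketch below parses a hypothetical policy with Python’s standard-library `urllib.robotparser` to check how a crawler that respects it would behave. The paths (`/search`, `/account/`), the crawl delay, and the choice of `GPTBot` as the example agent are assumptions for illustration, not our actual policy. `Crawl-delay` is a non-standard directive that some crawlers ignore, and this parser applies the first matching rule in a group, whereas some crawlers (Google’s, for example) use the most specific match, so rules should be written so that order does not matter.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt policy, for illustration only.
ROBOTS_TXT = """\
# Keep crawlers on content pages and ask GPTBot to pause between requests.
User-agent: GPTBot
Crawl-delay: 10
Disallow: /search
Disallow: /account/

User-agent: *
Disallow: /search
Disallow: /account/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    parser = RobotFileParser()
    # parse() accepts the file's lines directly, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    site = "https://example.org"
    for agent, path in [("GPTBot", "/clauses/example"),
                        ("GPTBot", "/search?q=net+zero"),
                        ("ChatGPT-User", "/account/settings")]:
        print(agent, path, rp.can_fetch(agent, site + path))
    print("GPTBot crawl delay:", rp.crawl_delay("GPTBot"))
```

A bot-specific group replaces the `*` group for that bot rather than adding to it, which is why the `Disallow` rules are repeated under `GPTBot`. Page-level instructions such as `noindex` can instead be sent in an `X-Robots-Tag` HTTP header, which robots.txt cannot express.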

Broaden our web analytics to include non-human traffic

As more AI tools and automated agents interact with our content, we need to expand our analytics to track machines as well as human users. This means continuing to monitor bot and agent traffic to our website and treating it not as noise, but as a meaningful signal of reach and relevance.

As we develop and launch our API, we’ll also put in place systems to monitor usage there: tracking request volumes, endpoints accessed and (where possible) the types of applications making those calls.
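Monitoring API usage along these lines can start very simply: count requests per day, per endpoint, and per kind of client. The sketch below assumes a hypothetical request-log record with `day`, `endpoint` and `client_type` fields; how the client type would be determined (for example, from an API key or User-Agent) is left open, and the code only aggregates records rather than describing a real logging setup.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ApiRequest:
    # Hypothetical log fields, for illustration only.
    day: str          # ISO date, e.g. "2025-07-01"
    endpoint: str     # route template, e.g. "/clauses/{id}"
    client_type: str  # e.g. "legal-ai-tool", "search-crawler"


def summarise(requests: list[ApiRequest]) -> dict:
    """Aggregate request volumes by day, endpoint, and client type."""
    return {
        "total": len(requests),
        "by_day": dict(Counter(r.day for r in requests)),
        "by_endpoint": dict(Counter(r.endpoint for r in requests)),
        "by_client_type": dict(Counter(r.client_type for r in requests)),
    }


if __name__ == "__main__":
    log = [
        ApiRequest("2025-07-01", "/clauses", "legal-ai-tool"),
        ApiRequest("2025-07-01", "/clauses/{id}", "legal-ai-tool"),
        ApiRequest("2025-07-02", "/clauses", "search-crawler"),
    ]
    print(summarise(log))
```

Grouping by route template rather than by full URL keeps the endpoint counts readable even when thousands of different clause IDs are requested.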

Together, these machine interactions are becoming a key way our content is accessed, applied, and repurposed – and should be recognised as a vital part of how we understand and measure our broader impact.

Have more conversations with real users

While the first few areas are largely digital, this piece is more human. Without detailed analytics to show us what users are doing and how they’re using our work, we’ll need to put more focus on speaking directly to people and understanding their needs. Initiatives like the Climate Clauses Working Group give us a valuable opportunity to hear from users firsthand.

Develop new ways to show our impact

With website traffic declining, and the data from traditional analytics with it, we’ll be focussing on three things to build a broader picture of our impact:

- Strengthening our theory of change – to make the logic of our impact clearer and more compelling, even when direct data is limited.

- Targeting the evidence that matters most – such as how our content is being used by legal AI tools, cited by professionals, or embedded in legal workflows – even if these are harder to track.

- Improving qualitative feedback and targeted insights – such as through case studies, user stories and deeper engagement with specific partners or audiences.

This isn’t about lowering our standards, but adapting them to fit a changing digital landscape where impact is real, but increasingly indirect. With this approach, we can continue to show that our content is influencing practice and policy, even as the way people access it evolves.

New challenges, bigger opportunities

The way people discover and use open legal content is changing fast. While this brings new challenges for how we track impact, it also opens new doors – allowing our work to reach wider audiences through the tools they already use. By building the right infrastructure and listening closely to our community, we can shape how legal AI evolves – and ensure climate-aligned contracting is part of its foundation.

Tired of prompting?

Getting climate into your contracts is easier with a community of peers helping you take action. Join the Climate Clauses Working Group, which meets for one hour, once a month. All sessions take place remotely, using Zoom.